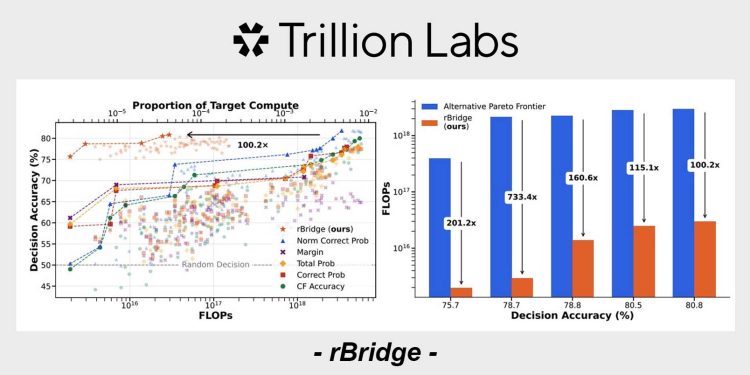

As global AI labs race to scale massive language models, one Korean startup is tackling the cost problem head-on. Trillion Labs’ new rBridge framework offers a way to predict how large models will perform using only smaller, cheaper ones. According to the company, the method could cut some AI research costs by a factor of more than 100, changing how startups and labs build competitive AI systems.

Trillion Labs Launches rBridge to Predict Large Model Performance

Korean AI startup Trillion Labs, previously selected for AWS Global Accelerator, announced the release of rBridge on October 21, 2025. rBridge is a new methodology that predicts the reasoning performance of large language models (LLMs) using small proxy models with fewer than one billion parameters.

Traditionally, training or evaluating a large model—sometimes with tens of billions of parameters—requires enormous GPU resources and cost. The challenge lies in the fact that reasoning capabilities only emerge when a model crosses a certain scale, making small-scale predictions highly unreliable.

Trillion Labs’ rBridge addresses this gap by designing a predictive method that aligns model evaluation with actual learning objectives. This allows smaller models to simulate and forecast the behavior of much larger ones with high accuracy, dramatically cutting down computational expenses.

According to company data, rBridge cuts the cost of dataset evaluation and model ranking by a factor of more than 100 and is up to 733 times more efficient than conventional evaluation methods.

Breaking the Scale Barrier in AI Research with rBridge by Trillion Labs

In large-scale AI research, model reasoning tends to improve sharply only after reaching critical mass. This forces both startups and large corporations to train multiple massive models just to find viable configurations—an approach that costs millions of dollars per iteration.

Trillion Labs’ approach provides a scalable alternative. By using small models as predictive proxies, researchers and companies can now test datasets, evaluate architectures, and forecast potential outcomes without direct large-scale training.

The rBridge method has been validated across multiple model sizes, ranging from 100 million to 32 billion parameters, on six reasoning benchmarks, including GSM8K, MATH, ARC-C, MMLU Pro, and HumanEval.

These results show that smaller models can now provide reliable insight into performance scaling trends—an advancement with major implications for both academia and commercial AI development.
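One common way to check whether a small proxy "agrees" with a large model is to compare how each ranks the same set of candidate training datasets. The sketch below is only an illustration of that idea, not rBridge itself: it computes a Spearman rank correlation in plain Python, and the dataset scores, variable names, and helper functions are all hypothetical.

```python
from typing import Sequence


def ranks(values: Sequence[float]) -> list[float]:
    """Return 1-based ranks (highest value gets rank 1), averaging ties."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    result = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the group while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        average_rank = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            result[order[k]] = average_rank
        pos = end + 1
    return result


def spearman(a: Sequence[float], b: Sequence[float]) -> float:
    """Spearman correlation: Pearson correlation computed on the ranks."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    ra, rb = ranks(a), ranks(b)
    n = len(ra)
    mean_a, mean_b = sum(ra) / n, sum(rb) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(ra, rb))
    var_a = sum((x - mean_a) ** 2 for x in ra)
    var_b = sum((y - mean_b) ** 2 for y in rb)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined when all scores are tied")
    return cov / (var_a * var_b) ** 0.5


# Hypothetical benchmark scores for four candidate datasets (A, B, C, D).
# These numbers are made up for illustration and are not from Trillion Labs.
proxy_scores = [0.42, 0.35, 0.51, 0.29]   # small proxy model
large_scores = [0.58, 0.61, 0.70, 0.49]   # large target model

rho = spearman(proxy_scores, large_scores)
print(f"rank agreement (Spearman): {rho:.2f}")  # prints 0.80 for this toy data
```

A value near 1.0 would mean the proxy orders the datasets almost the same way the large model does, which is the property that makes cheap proxy runs useful for ranking decisions.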

A Turning Point in Korea’s LLM Research and AI Development

Shin Jae-min, CEO of Trillion Labs, stated:

“This research proves that even small models can reliably predict the reasoning capabilities of large-scale LLMs. It opens a new path for researchers and companies to make data and model design decisions far more efficiently, signaling a turning point in LLM research and the broader AI ecosystem.”

Industry observers note that this kind of predictive modeling could also strengthen Korea’s position in the global AI race, where innovation is increasingly driven not just by model size, but by efficiency, accessibility, and cost-effectiveness.

rBridge by Trillion Labs: Leveling the Field for AI Startups

The release of rBridge by Trillion Labs points to a potential equalizer for Korea’s startup ecosystem. High computational costs have long prevented early-stage AI startups from competing with global tech giants.

By enabling researchers to simulate large-model outcomes using smaller models, rBridge could democratize access to advanced AI development, encouraging more startups to experiment, prototype, and contribute to Korea’s growing deep learning research base.

This innovation could also reinforce Korea’s AI sovereignty, meaning the nation’s ability to advance AI research independently, without heavy reliance on foreign infrastructure or resources. By reducing the cost and scale requirements for meaningful AI innovation, rBridge could help domestic startups, research labs, and public institutions strengthen Korea’s technological self-sufficiency.

Additionally, the initiative aligns closely with the government’s AI Transformation (AX) and Digital Korea strategies, which prioritize AI cost optimization, R&D democratization, and startup participation in national-scale innovation.

If widely adopted, rBridge could further position Korea as a hub for efficient, sustainable, and sovereign AI research, complementing the nation’s increasing investments in semiconductors and model optimization technologies.

A New Direction for Scalable AI Research

The global AI industry is entering an era where efficiency, not just scale, defines leadership. Trillion Labs’ rBridge marks a step toward this shift, suggesting that smaller models can reliably forecast the capabilities of massive ones.

This further illustrates how startup-driven innovation can reshape not only AI cost structures but also Korea’s long-term competitiveness and digital independence in next-generation computing. As more research communities adopt similar methods, Korea’s AI ecosystem may finally move closer to inclusive, resource-efficient, and globally trusted innovation.
