South Korea is preparing to enforce its AI Basic Law in January 2026. The law will be the world's second comprehensive AI regulatory framework, after the EU AI Act. The government aims to strengthen trust and safety in AI. Startups and academics, however, warn that unclear definitions, compliance burdens, and vague enforcement rules could stifle growth. The debate now centers on how Korea can balance responsible regulation with global competitiveness.

AI Basic Law Draft Enforcement Decree Released: Concerns Over High-Impact Criteria

The Ministry of Science and ICT recently unveiled a draft enforcement decree for the AI Basic Law. The decree, along with two ministerial ordinances and five guidelines, is set for legislative notice in October and finalization by December 2025.

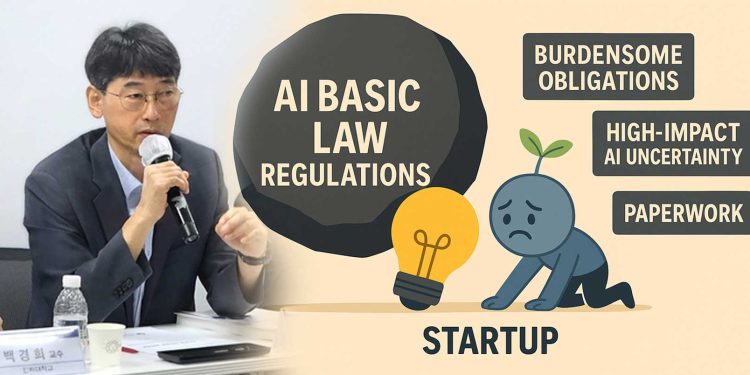

Since the draft's release, Korean startups have raised particular concern over how "high-impact AI" is classified. Companies are expected to self-assess whether their systems fall into this category, but official confirmation could take up to three months. That uncertainty could disrupt product timelines and investment decisions.

Operators whose systems are designated high-impact must meet extensive obligations: risk management, explainability, user protection, supervision, documentation, and disclosure for up to five years. For small firms, these requirements translate into heavy administrative and financial strain.

Generative AI and open-source models face additional complications, because a single high-risk application may trigger the full set of compliance obligations. Industry experts warn that such rules could end up penalizing innovation rather than protecting users.

How Korea’s AI Basic Law Compares Globally

With the AI Basic Law, Korea follows the EU's lead on the AI Act. Questions persist, however, over whether Korean startups can stay competitive under stricter regulatory demands.

Separately from the high-impact category, the draft defines "high-performance AI" as systems trained with 10^26 FLOPs or more of cumulative compute. These systems must adopt enhanced safety measures. The standards are intended to address risks to human rights and safety, but they go further than those of many Asian peers.

For global founders and investors, the outcome will influence Korea’s attractiveness as an AI development hub compared to regional rivals like Singapore and Japan.

Academic and Startup Leaders Call for Clearer AI Rules

Startup Alliance, in a recent issue paper led by Professor Kim Hyun-kyung of SeoulTech, outlined seven recommendations to ease burdens and provide clarity:

- Establish exceptions for non-risk use cases, modifiable results, or academic/artistic purposes.

- Replace self-assessment with a government-led checklist and guarantee the right to appeal or request reevaluation.

- Narrow the broad definition of “AI systems” to those with a clear threshold of autonomy and adaptability.

- Build startup-friendly compliance frameworks with simplified templates.

- Differentiate obligations between developers and service providers.

- Clarify “user” concepts to avoid confusion in B2B2C chains.

- Introduce exceptions to transparency and watermarking rules, especially where technical feasibility remains limited.

Professor Kim Hyun-kyung also emphasized:

“The current draft does not sufficiently reflect industry realities. Forcing companies to self-assess high-impact status creates uncertainty, and delays in government confirmation risk undermining business schedules.”

Korea’s AI Basic Law Debate Could Reshape the Startup Ecosystem

For startups, clarity in regulation is not just a compliance issue but a matter of survival. Heavy documentation requirements could drain resources, while uncertainty around high-impact AI may deter investment.

Korea’s AI Basic Law is also a signal to the global market. By enforcing rigorous but ambiguous standards, Korea risks constraining its innovation edge just as global AI competition accelerates. For founders, investors, and policymakers worldwide, the unfolding debate provides a case study in balancing safety with competitiveness.

If Korea succeeds in refining its framework into a transparent, startup-friendly model, it could strengthen its position as a venture capital hub for AI in Asia. If not, it risks driving talent and capital elsewhere.

A Test for Korean Innovation Policy

The government will collect feedback in October and finalize the decree by year's end. The law takes effect on January 22, 2026. After that date, a one-year grace period will defer fines, giving companies time to prepare.

Still, the stakes are high. How Korea resolves the tension between trust-building regulation and startup-driven innovation will shape its AI ecosystem for years to come. It will also determine whether the country leads or falls behind in the global race for AI competitiveness.

– Stay Ahead in Korea’s Startup Scene –

Get real-time insights, funding updates, and policy shifts shaping Korea’s innovation ecosystem.

➡️ Follow KoreaTechDesk on LinkedIn, X (Twitter), Threads, Bluesky, Telegram, Facebook, and WhatsApp Channel.